Sovereign Cloud Blog

Sovereign Cloud is built to serve industry-specific customers that host regulated workloads. It includes embedded cloud security services, data protection and availability services, compliance services, physical security controls, security clearances for operators, additional network segmentation, and restricted, regulated network access. Sovereign Cloud also provides data sovereignty: all customer data, metadata, and escalation data reside on sovereign soil.

AGI, Simplified!

The emerging technology landscape continues to impress us with its advancements and with the growing acceptance of the ‘known unknowns’ of AI/ML, which are making headway towards a new paradigm called AGI, Artificial General Intelligence. AGI is about an intelligent agent or machine with the learning and reasoning powers of a human mind: one engineered to understand or learn any intellectual task that a human being can, perceive its environment, take actions autonomously to achieve goals, and improve its performance through learning or by applying knowledge. No, we are not talking science fiction, but a nearly real stream of computer science built on cognition and intuition, spanning autonomous learning, knowledge acquisition, reasoning, and the ability to understand something instinctively via abstract thinking as a collective ability. For example, even a specific, straightforward task like machine translation requires that an intelligent machine read and write both languages (NLP), follow the author's argument (reason), know what is being talked about (knowledge), and faithfully reproduce the author's original intent (social intelligence). All of these problems need to be solved simultaneously to reach human-level machine performance.

Application Modernization, Simplified!

Thinking about application modernization is somewhat like renovating an old house: a structural and functional overhaul using the latest tools and techniques. This is not a new concept; most of us have been dealing with it since the first application we deployed and hosted, as part of the constant effort to keep it running and relevant. Today, application modernization is a critical part of the digital transformation vision and a strategic approach to bringing about change on multiple fronts, such as platform, framework, functionality, process workflows, and interoperability with legacy applications. Depending on its scope and priority, an application modernization initiative might be road-mapped into several sprints or phases to minimize disruption to the application's stakeholders.

Algorithms, Simplified!

In modern computing, algorithms are used widely in fields ranging from forensics, forecasting, and financial modelling to futuristic predictions, often alongside emerging technologies such as Machine Learning and Data Science. In a true sense, they extend business logic through a set of iterative computations and enable advanced analytics with speed and accuracy.
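To make "iterative computations" concrete, here is a minimal sketch of one classic forecasting algorithm, simple exponential smoothing. It is one illustrative choice among many forecasting algorithms; the function name, the smoothing factor `alpha`, and the sample demand figures are assumptions.

```python
def exponential_smoothing_forecast(series: list[float], alpha: float) -> float:
    """Return a one-step-ahead forecast using simple exponential smoothing.

    alpha in (0, 1]: higher values weight recent observations more heavily.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]  # initialise the smoothed level with the first observation
    for observation in series[1:]:
        # each iteration blends the new observation with the previous level
        level = alpha * observation + (1 - alpha) * level
    return level


monthly_demand = [10.0, 12.0, 14.0]  # illustrative numbers only
print(exponential_smoothing_forecast(monthly_demand, alpha=0.5))  # 12.5
```

The single loop is the whole algorithm: every new data point nudges the running estimate, which is why it is cheap enough to recompute continuously as data arrives.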

5G, Simplified

Greater bandwidth, faster connectivity and download speeds, and ultra-low latency are the promises of 5G, the revolutionary fifth generation of cellular networks, touted to be up to 100 times faster than the 4G networks serving us today. According to the GSMA, 113 mobile operators have already launched 5G networks in 48 countries, and 5G is predicted to have more than 1.7 billion subscribers worldwide by 2025.

Edge Computing, Simplified!

Here is a little twist on the original quote by the famous Everest climber Jim Whittaker, rather apt when it comes to distributed computing reaching the edge. Today’s cloud computing strategy spans beyond hybrid clouds and is even getting off the hypervisor bus, preferring bare-metal deployments for reusable resources via serverless or container-led microservices running on the edge cloud. The monolithic trend is breaking down at a faster pace, with near-shore points of presence augmented through edge enablement, from static workloads to real-time edge processing workloads.

IPv6, Simplified!

As per internet usage statistics, the number of internet users skyrocketed in the last decade at an unprecedented growth rate of over 1,300%, and almost 65% of the world’s population are now active internet users. In India alone, internet user growth from 2000 to 2021 exceeded a whopping 11,000%. Yes, you read that correctly; it’s 11,200% to be precise, as quoted. The same is true of connected devices; interestingly, connected devices are now generating more data than traditional application transactions. Statista projects that by 2025 there will be more than 75 billion Internet of Things (IoT) connected devices in use, nearly a threefold increase from the IoT installed base in 2019.
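A quick way to see why these numbers matter for IPv6 is to compare address space sizes. This sketch uses Python’s standard `ipaddress` module; the 75 billion figure is the Statista projection quoted above, and the raw IPv4 total overstates what is actually usable, since large blocks are reserved for private, multicast, and other special purposes.

```python
import ipaddress

# Total size of each address family (every possible address, reserved ones included)
ipv4_space = ipaddress.ip_network("0.0.0.0/0").num_addresses  # 2**32
ipv6_space = ipaddress.ip_network("::/0").num_addresses       # 2**128

iot_devices_2025 = 75_000_000_000  # Statista projection quoted above

print(f"IPv4 addresses: {ipv4_space:,}")  # 4,294,967,296
print(f"Projected IoT devices exceed IPv4 space: {iot_devices_2025 > ipv4_space}")
print(f"IPv6 addresses per projected device: {ipv6_space // iot_devices_2025:.3e}")
```

Even before counting phones, laptops, and servers, the projected IoT base alone is more than 17 times the entire IPv4 space, while IPv6 still leaves an astronomically large number of addresses per device.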

Confidential Computing, Simplified!

The world is becoming more and more reliant on digital infrastructure, as it helps businesses and ecosystems transform their services and operations through the steady adoption of digital strategies: cloud-native applications, automation, and emerging technologies such as AI/ML, IoT, and Edge Computing. This makes digital transformation (DX) the core of their organizational imperatives.

MLOps, Simplified!

‘ML’ stands for Machine Learning, the fastest-developing technology under the Artificial Intelligence umbrella, expanding at an unprecedented pace with a steep adoption curve and a growing number of deployed use cases. ‘Ops’ is Operations, which manages and enables the underlying infrastructure, services, and support processes needed to run the toolsets and application workloads successfully. In our last interaction we learned about DevOps, and a few months ago we touched base on Machine Learning. Having discussed these two concepts individually, we must also look at how Machine Learning leverages the DevOps framework for developing, deploying, scaling, and retraining ML models, hence the name MLOps.
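One recurring MLOps task is deciding when a deployed model should be retrained. The sketch below assumes a simple policy: retrain when accuracy over the last N labelled predictions falls below a threshold. The class name, window size, and threshold are hypothetical, and production pipelines often also watch for input data drift rather than accuracy alone.

```python
from collections import deque


class RetrainingMonitor:
    """Hypothetical helper: flags a deployed model for retraining when its
    rolling accuracy over the most recent `window` predictions drops below
    `threshold`."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.outcomes = deque(maxlen=window)  # oldest outcomes slide out automatically
        self.threshold = threshold

    def record(self, prediction, actual) -> None:
        # store whether the prediction matched the ground-truth label
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self) -> float:
        if not self.outcomes:
            return 1.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # only decide once the window holds enough evidence
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.rolling_accuracy() < self.threshold


monitor = RetrainingMonitor(window=4, threshold=0.75)
for pred, actual in [(1, 1), (0, 0), (1, 1), (1, 0)]:
    monitor.record(pred, actual)
print(monitor.needs_retraining())  # False: accuracy is exactly 0.75
monitor.record(1, 0)               # the oldest correct outcome slides out
print(monitor.needs_retraining())  # True: accuracy is now 0.5
```

In a pipeline, a `True` result would typically kick off the same automated build, test, and deploy steps DevOps uses for code, this time for a freshly trained model.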

DevOps, Simplified

Most emerging technologies and SaaS-based solutions today practice rapid application development, enabling use cases via agile frameworks fueled by DevOps, a proven methodology for the system development life cycle. It combines development, quality assurance, and IT operations into a single seamless function managed through specialized automation and self-service, mostly leveraging varied open-source tools, often called toolchains. The term emerged around 2009, and the practice inherited best practices from ITIL processes, PDCA, Lean, and Agile approaches, with continuous development and integration as a core principle.

Augmented Reality, Simplified

Augmented Reality (AR) is real, and ever closer to reality. Using augmented elements layered atop real-life visuals, along with sound or other sensory stimuli, augmented reality enriches our perceptions, interactions, and overall experience. There are many success stories and use cases of AR that are seamlessly becoming part of our lives, transforming the way we learn, interact, and experience the world around us.

Digital Payment Simplified

Digital payments are today the main force behind the thriving global digital economy. With a rising user base of smartphones, mobile and internet banking, e-commerce, and improved internet penetration, the world has started witnessing a downward trend in cash in circulation after decades of year-on-year increases. Fundamentally, digital payments must be faceless, cashless, and carried out end to end by completely electronic means, without compromising availability, provenance and traceability, non-repudiation, and of course information security. Over the last decade, digital payments have garnered a lot of interest and adoption from users and have positively influenced the digital agenda of enterprises and governments.

Database Evolution Simplified

Databases are core elements of any application environment. Like distributed applications, they keep evolving to solve fundamentals such as availability and integrity of data, and to keep running under any adverse situation. Not so long ago, BCP/DR documents mentioned terms like failover, fallback, primary and secondary databases, and log shipping. All of these were nightmare words for database administrators. When a disaster played out, they had to work round the clock to get their databases, indexes, and logs to a running state without losing any data, which could take anywhere from a few minutes to many hours or even a few days. Database vendors then got creative and built ancillary products and features, which were sold to help users protect their data against such situations and recover their databases faster.
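Log shipping, one of the terms above, can be sketched in a few lines: the primary appends every change to an ordered log, and the secondary replays the records it has not yet applied. This is a toy, in-memory illustration with hypothetical class names, not how any particular database engine implements it; it also shows why a failover can lose writes that had not yet been shipped.

```python
from dataclasses import dataclass, field


@dataclass
class PrimaryDB:
    data: dict = field(default_factory=dict)
    log: list = field(default_factory=list)  # ordered (lsn, key, value) records

    def write(self, key, value) -> None:
        lsn = len(self.log) + 1  # log sequence number, starts at 1
        self.log.append((lsn, key, value))
        self.data[key] = value


@dataclass
class SecondaryDB:
    data: dict = field(default_factory=dict)
    applied_lsn: int = 0  # last log record replayed here

    def replay(self, records) -> None:
        for lsn, key, value in records:
            if lsn > self.applied_lsn:  # ignore records already applied
                self.data[key] = value
                self.applied_lsn = lsn


def ship_logs(primary: PrimaryDB, secondary: SecondaryDB) -> None:
    """Send every log record the secondary has not yet replayed."""
    secondary.replay(primary.log[secondary.applied_lsn:])


primary, standby = PrimaryDB(), SecondaryDB()
primary.write("acct:1", 100)
primary.write("acct:2", 250)
ship_logs(primary, standby)
primary.write("acct:1", 75)  # not shipped yet
# if the primary failed now, the standby would be missing the last write
print(standby.data)  # {'acct:1': 100, 'acct:2': 250}
```

The gap between the primary's latest log record and the standby's `applied_lsn` is exactly the data at risk in a failover, which is what newer synchronous replication features aim to shrink.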

Quantum Computing, Simplified!

Modern workloads are changing the dynamics of computing capabilities due to a surge in data from devices, sensors, and wearables beyond traditional applications. Most complex problems have been addressed via High-Performance Computing, which can also be called the epitome of digital computing at present. Yet the expectation is to cross further boundaries through a different approach: using quantum-mechanical phenomena such as superposition and entanglement to perform computations, changing the notion of computation itself.
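Superposition and entanglement can be illustrated with a small state-vector simulation in plain Python. This sketch, with hypothetical helper names, applies a Hadamard gate to create a superposition and then a CNOT gate to entangle two qubits into a Bell state. It only simulates the mathematics on a classical machine and says nothing about how physical quantum hardware works.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)

# Hadamard gate: maps |0> to an equal superposition of |0> and |1>
H = [[SQRT_HALF, SQRT_HALF],
     [SQRT_HALF, -SQRT_HALF]]

# CNOT on two qubits (first qubit = control), basis order |00>, |01>, |10>, |11>
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]


def apply(gate, state):
    """Multiply a gate matrix by a state vector."""
    return [sum(gate[r][c] * state[c] for c in range(len(state)))
            for r in range(len(gate))]


def tensor(a, b):
    """Kronecker product of two state vectors (joins two qubits)."""
    return [x * y for x in a for y in b]


def probabilities(state):
    """Born rule: measurement probability is the squared magnitude."""
    return [abs(amp) ** 2 for amp in state]


zero = [1.0, 0.0]
plus = apply(H, zero)                   # superposition
bell = apply(CNOT, tensor(plus, zero))  # entangled Bell state

print(probabilities(plus))
print(probabilities(bell))
```

Both outcomes of `plus` come out with probability of about 0.5, and the Bell state only ever yields `00` or `11`, each with probability of about 0.5: measuring one qubit fixes the other. Simulating n qubits this way needs 2^n amplitudes, which is one intuition for why classical machines struggle to emulate large quantum systems.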

High-Performance Computing, Simplified!

Modern application workloads in advanced scientific, engineering, and technology domains are complex and demand high performance, power, and accuracy to solve problems on large datasets at very high speed. Innovations for scientific, industrial, and societal advancement now leverage Artificial Intelligence, the Internet of Things, data analytics, and simulation technologies as de facto standards across scientific and industrial use cases. In areas such as weather forecasting, stock market trend analysis, animation graphics rendering, fraud prevention in financial transactions, and aircraft design simulation, one crucial commonality is the ability to process large data in real time and provide insights or outcomes instantaneously or in near real time. On the other hand, drug discovery, scientific simulations involving large-scale calculations, astrophysics and space sciences, molecular modeling, quantum computing, climate research, cryptography, and other computational sciences require a wide range of computationally intensive tasks that can run for days or months. Such requirements commonly demand nothing less than High-Performance Computing (HPC), a term often used interchangeably with supercomputer. Since the cost of obtaining and operating a supercomputer and its custom software can easily run into millions of dollars, the technology remains far beyond the financial reach of most enterprises. Thus cluster-type HPC systems, built from relatively inexpensive interconnected computers running off-the-shelf software, are making waves: they are easy to deploy and affordable, yet still provide supercomputing capabilities.

Privacy Enhancing Technologies, Simplified!

The modern-day battle to protect the privacy of user data is never-ending and takes a great deal of effort to win against websites and applications installed and running on your laptops, tablets, mobile phones, and wearables. Although there is no silver bullet for complete protection, Privacy Enhancing Technologies (read, PETs) offer strategies and solutions that can help users regain some of the privacy lost by being online and connected to the internet. The objective is to minimize the possession of personal data without losing functionality, and to effectively control the personal data sent to, gathered by, and used by online service providers, application owners, merchants, and other online users or entities.
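Two common PET building blocks are data minimization and pseudonymization. The sketch below keeps only the fields needed for a purpose and replaces a direct identifier with a keyed HMAC-SHA256 token from Python’s standard library. The key, field names, and sample record are hypothetical. Note that pseudonymized data can still be re-identified by whoever holds the key, so it is not the same as anonymization.

```python
import hashlib
import hmac

# Hypothetical secret held only by the data controller; in practice it would
# live in a key-management system, never alongside the data.
PSEUDONYMIZATION_KEY = b"replace-with-a-secret-key"


def pseudonymize(identifier: str, key: bytes = PSEUDONYMIZATION_KEY) -> str:
    """Replace a direct identifier with a keyed, deterministic token (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, allowed_fields: set[str]) -> dict:
    """Keep only the fields a given purpose actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


raw = {"email": "asha@example.com", "name": "Asha", "city": "Pune", "age": 34}
shared = minimize(raw, {"email", "city"})
shared["email"] = pseudonymize(shared["email"])
print(shared)  # city kept, email replaced by a 64-character hex token
```

Because the token is deterministic, a service can still link repeat visits by the same user without ever seeing the email address itself.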

Distributed File Systems, Simplified!

File systems are responsible for organizing, storing, updating, and retrieving data. By design, each file system may take a unique approach or structure to data availability, read and write speed, flexibility, security, and the size or volume of data it can handle or control; in a conventional file system, all the users and the storage of the data are located on a single system.

Kubernetes Clusters, Simplified!

Today Kubernetes needs no introduction; most distributed, large-scale engineering deployments on the cloud use microservices architectures and deploy containerized workloads on Kubernetes. The challenge is that one size does not fit all, so sizing the cluster and mapping resources is a tightrope walk unless one understands the underlying factors.
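As a starting point for the sizing question, here is a back-of-the-envelope calculation that derives a node count from pod resource requests. The function, the headroom factor, the N+1 spare node, and the sample workload are all assumptions. A real scheduler also has to contend with bin-packing fragmentation, DaemonSets, per-node pod limits, and system reservations, so treat the result as a lower bound.

```python
import math


def nodes_needed(pod_requests, node_cpu_m, node_mem_mi,
                 target_utilization=0.8, spare_nodes=1):
    """Rough lower-bound node count for a set of pod resource requests.

    pod_requests: list of (cpu_millicores, memory_mib) per pod replica.
    node_cpu_m / node_mem_mi: allocatable CPU and memory per worker node.
    target_utilization: headroom so nodes are not packed to 100%.
    spare_nodes: extra nodes so workloads survive a node failure (N+1).
    """
    total_cpu = sum(cpu for cpu, _ in pod_requests)
    total_mem = sum(mem for _, mem in pod_requests)
    by_cpu = math.ceil(total_cpu / (node_cpu_m * target_utilization))
    by_mem = math.ceil(total_mem / (node_mem_mi * target_utilization))
    # the scarcer resource decides the node count
    return max(by_cpu, by_mem) + spare_nodes


# illustrative workload: 30 replicas requesting 500m CPU and 1 GiB each
pods = [(500, 1024)] * 30
print(nodes_needed(pods, node_cpu_m=3800, node_mem_mi=14336))  # 6
```

Here CPU is the binding constraint: 15,000 millicores at 80% of 3,800m per node needs 5 nodes, against 3 for memory, plus one spare.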

Everything as a Code!

Code is, and will always be, at the core of technology and engineering. It might be a simple text file, a hardwired device driver, a software-defined configuration, or even distributed repositories of reusable yet connected components; it still remains at the heart of “Everything” we run as software.

Video Streaming, Simplified!

It wouldn’t be right to start a discussion on video streaming without mentioning YouTube. With over 2 billion monthly active users, over one billion hours of content viewed per day, and 500 hours of content uploaded every minute, YouTube is a testimony in itself to video streaming and content delivery on the Internet, with revenue estimated at between $9.5 billion and $14 billion in 2018.

World of CPU GPU & FPGA, Simplified!

Since the invention of the abacus, the evolution from calculation to computing has tracked human invention. The abacus was used for addition and subtraction, and this primitive device underwent extensive transformation over time. We have seen the abacus’s most relevant cousin transform into the calculator, a special-purpose device, but the drive to perform ever more complex calculations kept the ball rolling when Blaise Pascal (1623–1662) invented the first practical mechanical machine, with a series of interlocking cogs and gears that could add and subtract decimal numbers.

Getting Your Microservices Strategy right!

In my 2015 article, we touched base on the fundamentals of microservices. The momentum has since picked up, and that warrants revisiting the approaches and strategies of microservices, which are transforming the landscape of application architecture and its consumption, redefining the design, development, data, and delivery aspects on a much larger scale even today. Microservices challenge the legacy approach of monolithic architecture by paving the way for a distributed, loosely coupled, interconnected, and highly scalable ecosystem of functionalities called fine-grained services. The strategy is to move capabilities out vertically, decouple each core capability together with its data, and redirect all front-end applications to the new APIs, in this era of microservices…
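The "redirect front-end applications to the new APIs" step is often implemented as a routing facade in front of the monolith, sometimes called the strangler fig pattern. The sketch below assumes hypothetical route prefixes and service names and picks a backend by the longest matching path prefix; paths that have not been migrated fall through to the monolith.

```python
# Hypothetical routing table for an incremental ("strangler") migration:
# capabilities already carved out of the monolith get their own service.
MIGRATED_ROUTES = {
    "/orders": "orders-service",
    "/orders/returns": "returns-service",
    "/catalog": "catalog-service",
}
LEGACY_BACKEND = "legacy-monolith"


def route(path: str) -> str:
    """Pick a backend using the longest matching migrated path prefix."""
    best_prefix = ""
    for prefix in MIGRATED_ROUTES:
        # match the prefix itself or a sub-path, but not "/ordersummary"
        matches = path == prefix or path.startswith(prefix + "/")
        if matches and len(prefix) > len(best_prefix):
            best_prefix = prefix
    # anything not yet migrated still goes to the monolith
    return MIGRATED_ROUTES.get(best_prefix, LEGACY_BACKEND)


for path in ["/orders/42", "/orders/returns/9", "/invoices/7"]:
    print(path, "->", route(path))
```

Each time another capability is decoupled with its data, a new entry is added to the table, and the monolith shrinks without a big-bang cutover.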

Five Dimensions of Digital Transformation..

So, what’s the difference between digital optimization and digital transformation? Many of us use the term digital transformation interchangeably for improved productivity, a better customer or user experience, and even greater revenue generation from existing streams, but that’s not Digital Transformation. It is Digital Business Optimization.

Machine Learning ecosystem, Simplified!

In the recent past we have been hearing a lot of buzzwords in the AI/ML domain, and many new high-impact applications and use cases of Machine Learning have been discovered and brought to light, especially in healthcare, finance, video, pattern, object, and speech recognition, augmented reality, and more complex analytics and cognitive AI applications. Over the past two decades, Machine Learning has become one of the mainstreams of emerging technology and, with that, a rather central, albeit usually hidden, yet impactful part of our lives. With the ever-increasing volume and variety of data (read, speech, text, and video) becoming available, there is good reason to believe that smart data analytics will become even more pervasive as a necessary ingredient of technological advancement.

About Blockchain & DLT!

According to IDC’s newly updated Worldwide Semi-annual Blockchain Spending Guide, worldwide spending on blockchain solutions is forecast to be nearly $2.9 billion in 2019, an increase of 88.7% from the $1.5 billion spent in 2018. IDC expects blockchain spending to grow at a robust pace over the 2018-2022 forecast period, with a five-year compound annual growth rate (CAGR) of 76.0% and total spending of $12.4 billion in 2022.

THE PERSONAL DATA PROTECTION BILL, 2018, in a nutshell!

Personal Data Protection Bill, Aadhaar (Amendment) Bill, and DNA Technology Regulations Bill are part of a 25-page bulletin, with a list of bills proposed to be introduced in the Budget session, uploaded on the Lok Sabha website on Friday, 21st June 2019.

Cognitive AI…Simplified!

During a few recent interactions with AI startups and advisors, the term “Cognitive computing” was used, often as an alternate term for AI. Let me tell you that the nuance between these two terms is similar to the difference between data analysis and data analytics: data analysis refers to the process of compiling and actually analyzing data to gain meaningful insights that support decision making, whereas data analytics is a superset that also includes the tools and techniques used to do so.

Modern CDN, Simplified!

Content Delivery Networks (CDNs) have been around for more than a decade now. They started as systems of distributed servers (read, PoPs) that deliver pages and other web content to users faster, serving locally cached copies from the content delivery server nearest to the user’s geographic location rather than from the origin (source) server hosting the web page far away. CDNs were proposed to solve content delivery bottlenecks such as scalability, reliability, and performance.

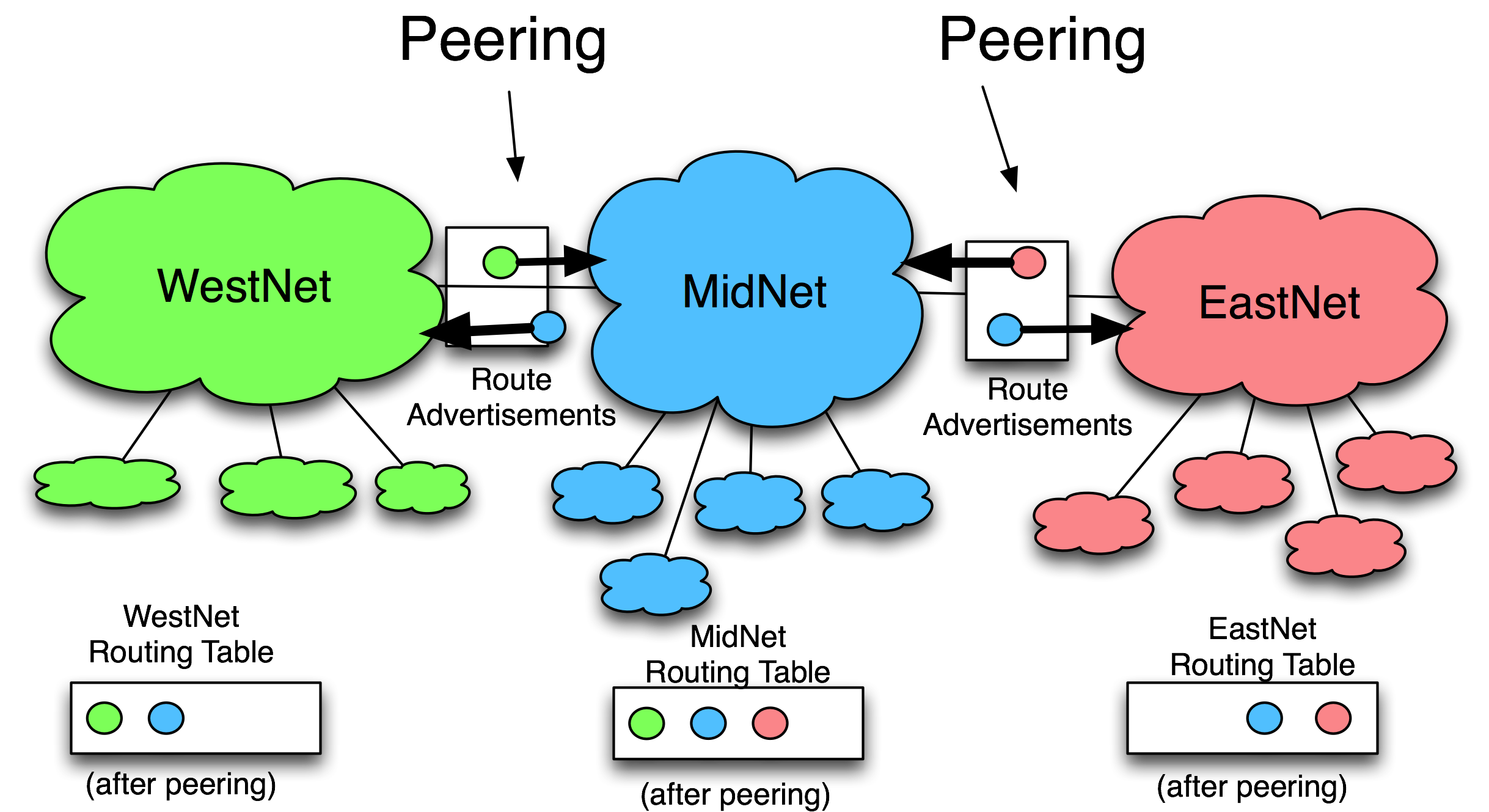
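The idea of serving from the nearest PoP can be sketched as a great-circle distance comparison using the haversine formula. The PoP names and coordinates below are hypothetical, and production CDNs typically steer users via DNS or anycast and weigh measured latency and load, not geography alone.

```python
import math

# Hypothetical PoP locations (latitude, longitude in degrees)
POPS = {
    "mumbai": (19.0760, 72.8777),
    "singapore": (1.3521, 103.8198),
    "frankfurt": (50.1109, 8.6821),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_pop(user_location):
    """Return the PoP with the shortest great-circle distance to the user."""
    return min(POPS, key=lambda name: haversine_km(user_location, POPS[name]))


print(nearest_pop((12.9716, 77.5946)))  # a user in Bengaluru -> mumbai
print(nearest_pop((48.8566, 2.3522)))   # a user in Paris -> frankfurt
```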

Internet Peering, Simplified!

Today’s digital world rests on the fundamental premise of connectivity. According to Statista, global IP data traffic reached a staggering 121,694 petabytes per month in 2017 and is expected to reach 278,108 petabytes per month. This is the reality of a connected global economy catering to digitally mobile consumers who want access to content and to interact with each other, applications that interconnect at every layer, and IoT devices that are extending our perimeter and reach.

CEPH Unified Storage, Simplified!

In today’s era, where data dictates the dynamics of applications and solves use cases of mobility and scale for the interconnected digital world, data storage is becoming a challenge for those who keep relying on legacy storage approaches and solutions that maintain silos of file systems (read, file servers), object storage, and block storage. Most organizations have thus neglected innovation, since innovating demands reinvesting in a completely new set of hardware and network gear with no guaranteed interoperability, on top of lock-in to the existing legacy.

Hyperledger, Simplified!

Hyperledger isn’t a tool or a platform; it’s the project itself, often referred to as the umbrella. Hosted by the Linux Foundation, Hyperledger now has more than 270 members representing various industries, including aeronautics, finance, healthcare, BFSI / NBFCs, supply chain, manufacturing, and technology. It was announced and formally named in December 2015 by 17 companies as a collaborative effort to advance blockchain technology for cross-industry use in business.

Looking forward, Beyond the Cloud!

Cloud adoption is now a given across almost all business verticals, and trends suggest that organizations, OEMs, and developer communities are looking well beyond the cloud over the next five years. In the coming months and years we will see a massive reorganization of the "Cloud": its future will be an augmentation of technological breakthroughs that challenge the very assumptions around the cloud stack and cloud-native applications, devices, and technologies, truly a transformation of the cloud enabled by the cloud!

Cloud Migrations, Simplified!

Digital transformation is driving cloudification, and cloud migration is a core activity every enterprise is grappling with. The debate about cloud adoption went on for a long time, but enough water has passed under the bridge: most enterprises now have some workload footprint in the cloud, and others are following suit to catch up. Cloudification was easier for those who started with cloud computing as their only choice, built all their applications and associated IT environments there, and had no legacy baggage to worry about. For others, however, strategizing and migrating still remains a humongous task. Through this article I am making an attempt to help organize a few thoughts and strategies about cloud migrations: the what’s, how’s, and when’s. (We agree the why is already answered!)

What is driving the Fourth Industrial Revolution (4IR)?

The fourth industrial revolution (4IR) is a reality, and its multi-fold impact on our lives is driven by the digital revolution. The phrase 'fourth industrial revolution' was popularized in 2016 by Klaus Schwab, founder of the World Economic Forum, who describes how this revolution is fundamentally different from the previous three, which were characterized mainly by advances in technology. This fourth revolution impacts our lives far more than before, transforming every aspect of the digital world. It is the endeavour in which advanced digital technologies such as big data, artificial intelligence, robotics, machine learning, and the internet of things interact with the physical world and create a lasting impact on our health, on how we interact with one another, and on how we work and do business. In fact, the very definition of work is being redefined in terms of our contribution to social, economic, and environmental aspects, or the outcomes we desire.

FWaaS, Explained!

FWaaS, Firewall as a Service, is simply network zoning with firewall partitioning: virtually isolated, logically grouped policies and their rules. Each virtual firewall has multiple policies, each tied to multiple rules (read, access control lists, i.e. ACLs). This makes it a great proposition as an optimized service running on physically shared devices or cloud instances, going completely virtual in a revolutionary way of delivering firewall and other network security capabilities as a cloud subscription service.
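Within one virtual firewall, policies are commonly evaluated top-down, with the first matching rule winning and an implicit deny at the end. This sketch assumes that evaluation model; the `Rule` fields, the tenant policy, and the addresses (taken from documentation ranges) are hypothetical, and real FWaaS offerings add stateful connection tracking, destination matching, and much more.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "allow" or "deny"
    source: str           # source CIDR, e.g. "10.0.0.0/8"
    protocol: str         # "tcp", "udp" or "any"
    port: Optional[int]   # destination port; None matches any port

    def matches(self, src_ip: str, protocol: str, port: int) -> bool:
        in_source = ipaddress.ip_address(src_ip) in ipaddress.ip_network(self.source)
        proto_ok = self.protocol in ("any", protocol)
        port_ok = self.port is None or self.port == port
        return in_source and proto_ok and port_ok


def evaluate(policy: list[Rule], src_ip: str, protocol: str, port: int) -> str:
    """First matching rule wins; traffic matching no rule is denied."""
    for rule in policy:
        if rule.matches(src_ip, protocol, port):
            return rule.action
    return "deny"  # implicit default deny


# Hypothetical policy for one tenant's virtual firewall
tenant_policy = [
    Rule("deny", "203.0.113.0/24", "any", None),  # known-bad range
    Rule("allow", "0.0.0.0/0", "tcp", 443),       # public HTTPS
    Rule("allow", "10.0.0.0/8", "tcp", 22),       # SSH from internal network only
]

print(evaluate(tenant_policy, "198.51.100.7", "tcp", 443))  # allow
print(evaluate(tenant_policy, "198.51.100.7", "tcp", 22))   # deny
```

Because order matters, the deny for the known-bad range sits above the broad HTTPS allow; swapping them would let that range through on port 443.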

Hyperscale or Hyperconverged Infrastructure, Simplified!

Modern applications and workloads are much more demanding in nature and are being liberated from the clutches of legacy layered stacks. The evolution of compute following data, runtime execution of code, and dynamic assembly of IT infrastructure resources is making everyone rethink their application deployment strategies. Every element of the ecosystem is being challenged to solve the issues learned in the past, be it availability, scalability, multi-tenancy, security, or integrity of workloads running from any location, cloud, or edge around the globe.

Serverless Computing, Simplified!

No kidding: serverless is not actually serverless. It certainly needs underlying infrastructure such as compute, memory, and storage, but the pain of managing that infrastructure and its capacity is entirely outsourced to and managed by your cloud service provider. It is thus all about the compute runtime, used for modern distributed applications that significantly or fully incorporate third-party, cloud-hosted applications and services to manage server-side logic and state, and that most of the time don’t even provide storage. (Persistent caches from microservices or neighboring pods are largely replacing working data and transaction stickiness.)

Data Modeling, Simplified!

A data model is a design for how to structure and represent information driven by the data strategy and thus provides structure and meaning to data.

As a first step a "Data model theory" is coined and then translated into a data model instance. Such model of required information about things in the business or trade, usually with some system, context in mind, further

GDPR - Clock is ticking!

Come 25th May 2018, companies that have customers in the EU, or that are part of a value chain catering to EU customers, will need to be on their toes. Those who haven’t been preparing for the past couple of years will now face challenges.

Data Archival-Strategic Importance!

While we continue to discuss datafication, the role of active data, and the data protection strategies around it, it is imperative to revisit data archival and governance. Most businesses today have one way or another of maintaining their data archives, but in reality the effectiveness of archival, and the qualitative approach to it, is still in the stone age. Don’t believe me? Let us dwell on this and talk about the strategy, best practices, and techniques deployed for data archival. The topic warrants this discussion due to GDPR and its impact on organizations that might still be struggling to make both ends meet, i.e. Governance and BAU (read, business as usual).

Protecting the Active Data – Strategic Insight!

Growing concerns about data governance and recent breaches call for insight into the most valuable asset of any organization: data.

Although data governance spans the availability, usability, integrity, and security of data as a whole, we will touch upon the “active data” of the organization and dwell upon the key challenges of managing it and the risk management strategies around it. ILM (Information Lifecycle Management) is a well-known framework explaining active data and its role in overall digital asset management.
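ILM is often put into practice as tiering rules based on how recently data was accessed, separating active data from data that is a candidate for archival. The sketch below assumes hypothetical 30-day and 365-day thresholds and three tier names; actual thresholds should come from the organization’s retention policy and regulatory obligations such as GDPR.

```python
from datetime import date

# Hypothetical thresholds; real values come from the organisation's
# retention policy and regulatory obligations.
TIERS = [
    (30, "active"),     # accessed within the last 30 days
    (365, "inactive"),  # accessed within the last year
]
ARCHIVE_TIER = "archive"


def classify(last_accessed: date, today: date) -> str:
    """Assign a lifecycle tier from the number of days since last access."""
    age_days = (today - last_accessed).days
    for max_age, tier in TIERS:
        if age_days <= max_age:
            return tier
    return ARCHIVE_TIER


today = date(2018, 5, 25)
for last_access in (date(2018, 5, 1), date(2017, 12, 1), date(2016, 1, 1)):
    # prints active, inactive and archive respectively
    print(last_access, classify(last_access, today))
```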

BI, Simplified!

Business intelligence (BI) has two different meanings related to the use of the term "intelligence": the first is the human intelligence capacity applied in business affairs and activities, and the second relates to intelligence as data assets, i.e. information valued for its currency and relevance.

Datafication...an outlook at our data assets!

Datafication (also referred to as Datafy) is a modern technological trend turning many aspects of our life into computerised data and transforming this information into new forms of value, says Wikipedia. Today, every aspect of our life is surrounded by a multitude of technologies that generate data.

GDPR, Quick insight on the impact on APAC business entities!

What is GDPR?

The General Data Protection Regulation (GDPR) is a regulation to strengthen and unify data protection for all EU citizens and residents by giving them choice and control over how their personal data is used, processed, and stored. It applies if the data controller (an organization that collects data from EU residents), the processor (an organization that processes data on behalf of the data controller, e.g. cloud service providers), or the data subject (person) is based in the EU. It also applies to organizations based outside the European Union if they collect or process personal data of EU residents. The regulation was adopted by the European Parliament, the Council of the European Union, and the European Commission on 27 April 2016. After a two-year transition period, it becomes enforceable from May 2018.

Fog computing, the strategic IoT gateway for "Cloud of Things"

Cloud computing is well adopted and enables most of today’s innovative technologies. I am attempting a compilation from various sources to take a closer look at Fog Computing, a "Cloud of Things", i.e. an enabler of the ecosystem of cloud computing and IoT systems. Edge computing is becoming more and more significant: advancements in mobile computing and associated peer-to-peer social applications, drones, connected cars, augmented reality, etc. are driving the agenda on how data gets generated, processed (locally), and disseminated in a decentralized way, while still building up a central knowledge repository to draw insights from algorithms and AI/ML (read, artificial intelligence and machine learning) artefacts.
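The "processed (locally)" part of fog computing can be sketched as a fog node that reduces raw sensor readings to a compact summary before anything goes to the cloud. The function name, the alert threshold, and the sample readings are hypothetical.

```python
from statistics import mean


def summarize_at_fog_node(readings: list[float], alert_threshold: float) -> dict:
    """Process raw sensor readings locally and return only what the cloud needs.

    Instead of forwarding every reading upstream, the fog node sends a compact
    summary plus any readings that cross the (hypothetical) alert threshold.
    """
    if not readings:
        return {"count": 0, "anomalies": []}
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        "anomalies": [r for r in readings if r > alert_threshold],
    }


# e.g. one minute of temperature readings from a connected machine
temperatures = [71.2, 70.8, 71.5, 93.4, 71.1]
summary = summarize_at_fog_node(temperatures, alert_threshold=90.0)
print(summary)
```

The central repository still receives enough to learn from (counts, ranges, outliers), while the bulk of raw data never leaves the edge, which saves bandwidth and lets the fog node react to anomalies locally.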

Firewall Best Practices to Block Ransomware Attacks and what has NxtGen done?

It is important to keep in mind that IPS, sandboxing and all other protection the firewall provides is only effective against traffic that is traversing the firewall and where suitable enforcement and protection policies are being applied to the firewall rules governing that traffic. So, with that in mind, follow these best practices for preventing the spread of worm-like attacks on your network:

Blockchain, “The Internet of Value”

Two key technologies are creating waves in the digital world and transforming business: first Bitcoin, and second Blockchain. The two are correlated but not the same. Bitcoin is a worldwide cryptocurrency and digital payment system that offers a distributed ledger system leveraging Blockchain technology, running transactions from multiple locations (read, devices, applications, or microservices) by multiple users while still maintaining the integrity of each transaction and the value remitted. The system is peer-to-peer, and transactions can run independently between users, without an intermediary.
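The integrity property described above comes largely from hash linking: each block stores the hash of its predecessor, so altering any earlier record breaks every later link. This minimal sketch shows only that mechanism; it deliberately omits consensus, proof-of-work, digital signatures, and networking, and the participants and amounts are hypothetical.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the block's contents."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list, transactions: list) -> None:
    prev = block_hash(chain[-1]) if chain else "0" * 64  # genesis has no parent
    chain.append({"index": len(chain), "prev_hash": prev, "transactions": transactions})


def is_valid(chain: list) -> bool:
    """Each block must reference the hash of the block before it."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


ledger = []
append_block(ledger, [{"from": "alice", "to": "bob", "amount": 5}])
append_block(ledger, [{"from": "bob", "to": "carol", "amount": 2}])
append_block(ledger, [{"from": "carol", "to": "dave", "amount": 1}])
print(is_valid(ledger))                        # True
ledger[0]["transactions"][0]["amount"] = 500   # tamper with history
print(is_valid(ledger))                        # False
```

In a real network, every peer holds a copy of the ledger and runs this kind of check, which is why a single party cannot quietly rewrite past transactions.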

Hadoop File Formats, when and what to use?

Hadoop is gaining traction and is on a steep adoption curve, liberating data from the clutches of applications and native formats. This article looks at the file formats supported by the Hadoop (read, HDFS) file system. A quick, broad categorization of file formats would be...

RANSOMWARE: What it is and how to deal with it

Almost every day we hear about cases of ‘Ransomware’ affecting running servers, an act we call digital terrorism. Let’s get a quick understanding of this risk and the preventive measures to mitigate it. Crypto-ransomware gains access to a target server and encrypts the files on it, scrambling their contents so that you can’t access them without the particular decryption key that can correctly unscramble them. This leaves the users or administrators of the server helpless: with strong modern encryption, there are generally no tools that can recover the files without that key. A ransom is thus demanded in exchange for the decryption key.

Focus on the Fourth ‘V’ of the Big Data for EDH enablement!

Big data, as defined by Wikipedia, is a term for data sets so large or complex that they are a constantly moving target for traditional strategies and tools, and traditional data processing applications are inadequate to process and manage them. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, querying, updating, and information privacy.